Upload To Digital Ocean Spaces From Your Dang Browser

Spaces is Digital Ocean's answer to AWS S3. The products are almost identical, so much so that the APIs are compatible. This is nice because you can use existing battle-hardened packages you might already be familiar with (like aws-sdk-js) to interact with Spaces. It'd also make it easy to migrate if you're sick of Jeff Bezos.

The downside is you wind up using AWS terminology to interact with Digital Ocean tech. It's confusing, and although there are a lot of articles out there on how to upload directly to S3 from the browser, it still took me way too long to get it going with Spaces.

tl;dr

The gist is, you need to:

- Add a Spaces access key here.

- Configure CORS for your space so your client can communicate with it.

- Have your server generate a short-lived pre-signed URL for uploading an object.

- Use your server-generated URL on the front end to upload the file.

Check out this repo for some code.

Prerequisites

You need a Digital Ocean account. You can create a space here (it'll cost you $5 a month).

I'm working with Node.js/Express and React, but the general idea applies to whatever tech you're using.

Configuring Your Space

The first thing to do is make sure you've got your Space configured properly. This entails:

- Generating a Spaces access key

- Configuring CORS

Generating a Spaces Access Key

Instead of using the key/secret associated with your DO account (which has access to all DO services and would be extra bad to have compromised) you can generate an access key (not a DO token) that can only access your space. Do that here.

Just click the frigg'n button. It's easy. Copy your key and secret and put 'em somewhere safe.

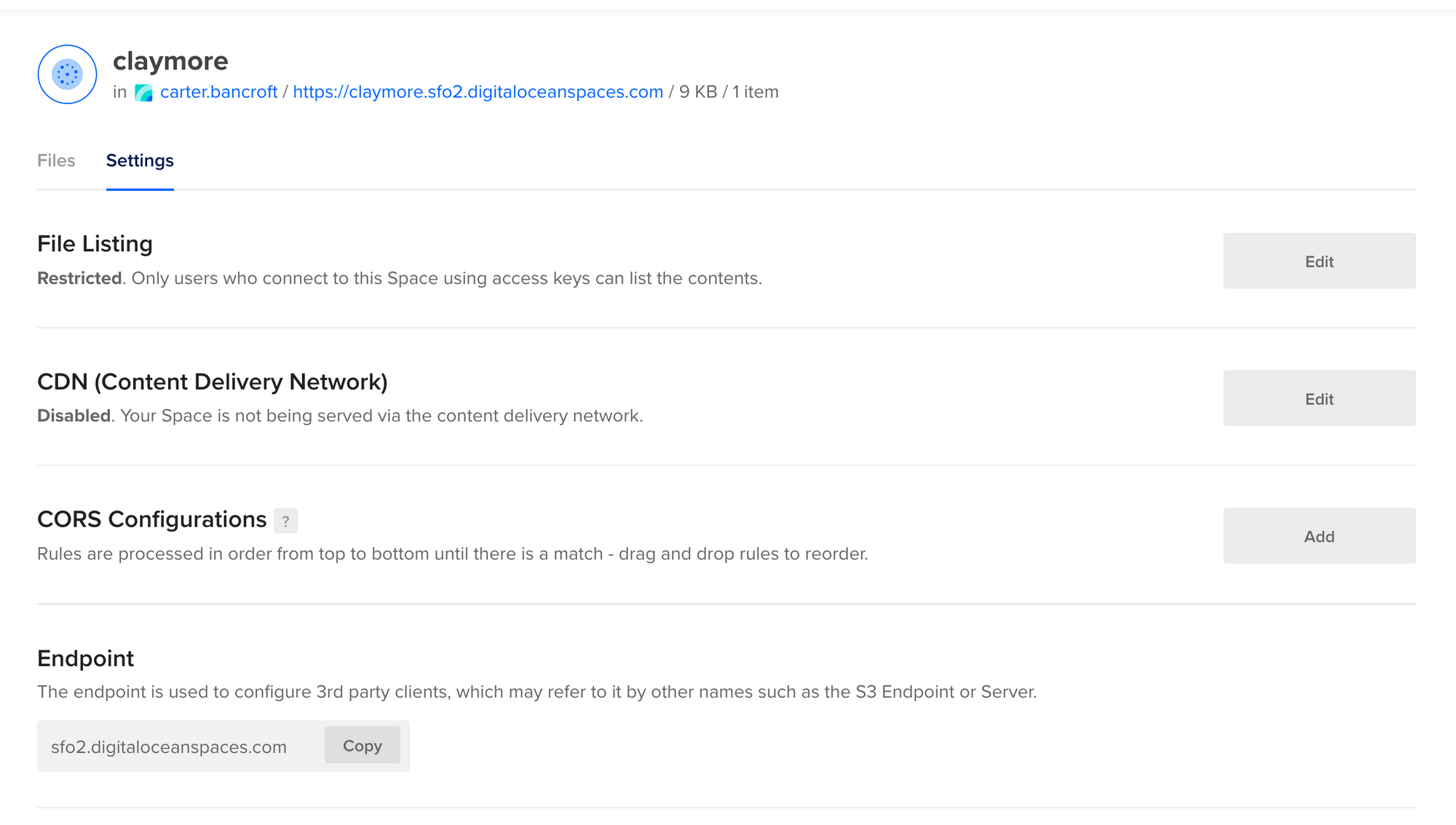
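The server code later in this post reads the key and secret from a `config` object that is never shown. Here is a minimal sketch of one way to build it from environment variables, so the secret stays out of your repo. The function name and the `SPACES_*` variable names are made up for illustration, and the field names are simply the ones the server code expects.

```javascript
// Sketch: build the `config` object from environment variables.
// loadSpacesConfig and the SPACES_* names are hypothetical; pick your own.
function loadSpacesConfig(env = process.env) {
  const required = {
    url: 'SPACES_URL', // region endpoint, e.g. nyc3.digitaloceanspaces.com
    spaceName: 'SPACES_NAME',
    accessKeyId: 'SPACES_KEY',
    secretAccessKey: 'SPACES_SECRET',
  }

  const spaces = {}
  for (const [field, varName] of Object.entries(required)) {
    if (!env[varName]) {
      // Fail at startup rather than at the first upload attempt.
      throw new Error(`Missing environment variable ${varName}`)
    }
    spaces[field] = env[varName]
  }
  return {spaces}
}
```

With that in place, `const config = loadSpacesConfig()` at the top of the server gives you `config.spaces.url`, `config.spaces.spaceName`, and the two credentials.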

Configuring CORS

Setting up CORS (cross-origin resource sharing) basically means your client, which will be on a different domain than DO, can communicate "cross-origin" with DO. This is configured per space under your space settings.

Click "Add" next to "CORS Configuration" and you'll see a screen like so.

You'll need to add your origin (or all origins using the * operator). I'm allowing all origins for this demo but you should restrict it to only the domains that requests will be coming from.

One problem however is that the Digital Ocean UI thinks http://localhost:<port> or IP addresses are invalid domains. Stupid.

Since this article is on uploads, make sure you allow PUT. I'm allowing both PUT and GET.

I'm also allowing all headers using *. If you know exactly what headers you are going to be sending with all requests you can set those here. If you get it wrong though requests will get blocked.

Finally I'm setting Access Control Max Age to 600 seconds. Who cares.
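For reference, here is roughly what those settings look like written out as data, in the shape the S3 API's `putBucketCors` call expects. This is a sketch, not what the demo does (the demo uses the UI). `buildCorsParams`, the space name, and the origin are placeholders, and it assumes Spaces accepts this call the same way S3 does.

```javascript
// Sketch: the CORS settings described above as putBucketCors params.
// buildCorsParams and the example values are hypothetical.
function buildCorsParams(spaceName, allowedOrigins) {
  return {
    Bucket: spaceName,
    CORSConfiguration: {
      CORSRules: [
        {
          AllowedOrigins: allowedOrigins, // ['*'] would allow every origin
          AllowedMethods: ['PUT', 'GET'],
          AllowedHeaders: ['*'],
          MaxAgeSeconds: 600,
        },
      ],
    },
  }
}

const corsParams = buildCorsParams('my-space', ['https://app.example.com'])
console.log(JSON.stringify(corsParams, null, 2))
```

If you'd rather apply it from code than click through the UI, something like `spaces.putBucketCors(corsParams).promise()` with the S3 client from the server section should do it, under the same assumption.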

The Server

Check out the whole chunk of server code here.

To interact with your Space we can use the Node AWS-SDK. As I mentioned, DO decided to use the exact same API for Spaces as S3.

    const aws = require('aws-sdk')

    // config.spaces holds your Space's endpoint URL, name, key and secret
    const spaces = new aws.S3({
      endpoint: new aws.Endpoint(config.spaces.url),
      accessKeyId: config.spaces.accessKeyId,
      secretAccessKey: config.spaces.secretAccessKey
    })

Now in your Express server what you need is an endpoint like this:

    // Needs a JSON body parser registered first, e.g. app.use(express.json())
    app.post('/presigned_url', (req, res) => {
      const body = req.body

      const params = {
        Bucket: config.spaces.spaceName,
        Key: body.fileName,
        Expires: 60 * 3, // Expires in 3 minutes
        ContentType: body.fileType,
        ACL: 'public-read', // Remove this to make the file private
      }

      const signedUrl = spaces.getSignedUrl('putObject', params)

      res.json({signedUrl})
    })

getSignedUrl is pretty much the key to this entire article. It generates a URL for a specific operation (in our case putObject) that can be used to upload a file without having access to any secret tokens.

Note that getSignedUrl doesn't actually make any requests to Digital Ocean to generate the signed URL. It all happens on your server, so no mocking or stubbing is necessary when testing.

The params object we pass into getSignedUrl is important here. A lot of errors stem from these parameters not matching what's included in the upload request.

Finally, add an expiry. These signed URLs should be short-lived so a leaked URL can't be re-used for long.
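Since mismatched params are where most of the pain comes from, it can help to build them in one small function and bail early when the request body is missing something. A minimal sketch, assuming the same `fileName`/`fileType` body as the endpoint above; `buildPutParams` is a made-up helper, not part of the SDK.

```javascript
// Sketch: build the getSignedUrl params in one place and reject bodies
// that are missing what we sign for. buildPutParams is hypothetical.
function buildPutParams(body, spaceName) {
  const {fileName, fileType} = body || {}
  if (typeof fileName !== 'string' || fileName.length === 0) {
    throw new Error('fileName is required')
  }
  if (typeof fileType !== 'string' || fileType.length === 0) {
    throw new Error('fileType is required')
  }
  return {
    Bucket: spaceName,
    Key: fileName,
    Expires: 60 * 3, // seconds; keep it short
    ContentType: fileType, // the upload's Content-Type header must equal this
    ACL: 'public-read', // the upload must then send x-amz-acl: public-read
  }
}
```

In the endpoint you'd call `spaces.getSignedUrl('putObject', buildPutParams(req.body, config.spaces.spaceName))` and answer with a 400 if it throws.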

The Client

Here's some real deal client code if you want to cut to the chase.

The two key parts are:

- Getting the Signed URL from your server

- Uploading the file with said signed URL

Getting The Signed URL

    // `file` (a File from an <input>) and API_URL come from the surrounding scope
    const getSignedUrl = async () => {
      const body = {
        fileName: file.name,
        fileType: file.type,
      }

      const response = await fetch(`${API_URL}/presigned_url`, {
        method: 'POST',
        body: JSON.stringify(body),
        headers: {'Content-Type': 'application/json'}
      })

      const {signedUrl} = await response.json()

      return signedUrl
    }

This is just a fetch to your endpoint, but note the request body. The SDK's getSignedUrl on the server requires a key and a content type, among other things. Make sure you send everything your endpoint needs to properly get a signed URL.

In my example I'm using the file name as the key.

Uploading The File

    const uploadFile = async signedUrl => {
      const res = await fetch(signedUrl, {
        method: 'PUT',
        body: file,
        headers: {
          'Content-Type': file.type,
          'x-amz-acl': 'public-read',
        }
      })

      return res
    }

This stuff needs to match what you included for the signed URL, specifically:

- Since we signed for putObject requests the method needs to be PUT.

- The Content-Type header needs to match the content type we included in the getSignedUrl call.

- If you signed for ACL: public-read you'll need to add the 'x-amz-acl': 'public-read' header.
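One way to keep the upload request and the signature from drifting apart is to derive the fetch options from the same values that were signed. This is a sketch under assumptions: `buildUploadOptions` is a made-up helper, and `signed` is a hypothetical object carrying the `ContentType` and (optional) `ACL` that went into the getSignedUrl call.

```javascript
// Sketch: derive the fetch options from the values that were signed.
// buildUploadOptions and the `signed` object are hypothetical.
function buildUploadOptions(file, signed) {
  const headers = {'Content-Type': signed.ContentType}
  if (signed.ACL) {
    // Only send the ACL header if an ACL was part of the signature.
    headers['x-amz-acl'] = signed.ACL
  }
  // putObject was signed, so the method is always PUT.
  return {method: 'PUT', body: file, headers}
}
```

The upload then becomes `fetch(signedUrl, buildUploadOptions(file, {ContentType: file.type, ACL: 'public-read'}))`. You could also have your endpoint return those values next to `signedUrl` so the client never has to guess.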

Pitfalls

The likeliest error points revolve around:

- Not matching the params in your upload call to how you signed your URL.

- Not having CORS properly configured on your space.

Make sure you got this shit right. Again look at my example for how the code should be at its simplest.
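These two failures tend to look different from the browser's side, which helps when you're debugging. A request blocked by CORS makes fetch reject outright, while a signature mismatch comes back as a normal response with an error status (fetch does not reject on a 403). Below is a rough sketch of telling them apart. `extractErrorCode` and `uploadOrExplain` are made-up helpers, and it assumes Spaces returns S3-style XML error bodies with a `<Code>` element such as `SignatureDoesNotMatch`.

```javascript
// Sketch: pull the <Code> element out of an S3-style XML error body.
// Assumes Spaces formats its errors the way S3 does.
function extractErrorCode(xml) {
  const match = /<Code>([^<]+)<\/Code>/.exec(xml)
  return match ? match[1] : null
}

// `fetchImpl` is injectable so this can be exercised without a network.
async function uploadOrExplain(signedUrl, options, fetchImpl = fetch) {
  let res
  try {
    res = await fetchImpl(signedUrl, options)
  } catch (err) {
    // fetch rejects when the browser blocks the request, which is
    // typically what a CORS misconfiguration looks like.
    throw new Error(`Request never completed, check CORS: ${err.message}`)
  }
  if (!res.ok) {
    // The request got through but was refused; the body says why.
    const code = extractErrorCode(await res.text())
    throw new Error(`Upload rejected (${res.status}): ${code || 'unknown error'}`)
  }
  return res
}
```

Swap `uploadOrExplain(signedUrl, options)` in for the bare `fetch` in the upload step and the thrown message should point you at the right half of this list.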

POSTing instead of PUTing or GETing

If you want to POST to Digital Ocean (or S3) directly from a form without short-circuiting the form's submit logic there's a POST version of getSignedUrl called createPresignedPost.

Here's the Node AWS SDK docs for it.

Now you know. Enjoy!